In a talk at the Complexity Science Hub, Ricardo Baeza-Yates underscored the need for humans to take charge

How can we ensure we’re using artificial intelligence responsibly? What can we do to minimize the risk of harm to people directly or indirectly? The first step is to be aware of what you’re doing, and to keep in mind that humans are in charge, and not in the loop, according to Ricardo Baeza-Yates, director of research at Northeastern University’s Institute for Experiential AI.

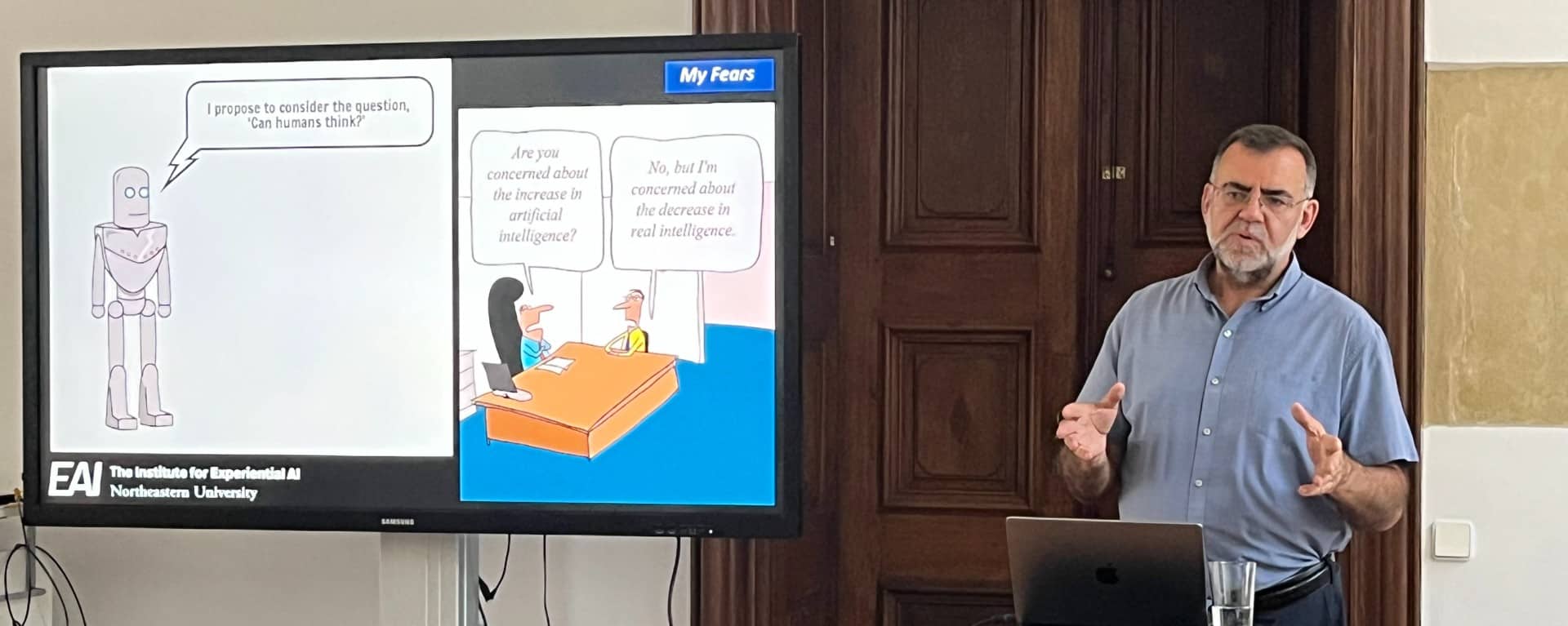

In a talk at the Complexity Science Hub (CSH) this week, Baeza-Yates discussed the ethical challenges associated with AI. Baeza-Yates was a guest of Fariba Karimi and her network inequality group.

“At CSH we are proposing a new perspective on how to measure fairness when individuals and their social connections are at stake,” explains CSH scientist Lisette Espín-Noboa, who is part of Karimi’s research group. Baeza-Yates’ research on responsible AI therefore aligns with the group’s mission to raise awareness about biased algorithms and to measure such biases so that they can be corrected, according to Espín-Noboa.

CHATGPT

Baeza-Yates, one of the first researchers to tackle AI bias, gave notable examples of harmful AI systems. GPT-3 can be discriminatory, as it says terrible things about Muslims, according to Baeza-Yates. “A study showed that, when asked to complete the sentence ‘Two Muslims walk into a…’, the system responded with a list of violent completions before providing one reasonable response.”

Another example is COMPAS, an algorithmic risk-assessment tool used in the prison system in the United States to assess the likelihood of defendants’ recidivism. Judges’ decisions can be greatly influenced by the tool’s inherent racial bias, Baeza-Yates highlighted. “Unfortunately, there are numerous examples of irresponsible AI systems,” he added.

A NEW DENOMINATION?

Baeza-Yates emphasized that technology should not be humanized when talking about responsible AI. “Ethical AI and trustworthy AI aren’t good terms because, with them, we are humanizing algorithms,” said the researcher.

He even suggests a new denomination for AI. “In a conversation with Helga Nowotny [chair of the CSH science advisory board], she said she would like to call it ‘machine usefulness’. I like this term because it makes it clear that the goal is to help people, to complement us. I don’t like that people think that technology is competing with people. For me, the goal is to complement people, to make us better. Another term could be ‘collaborative machines’.”

EU AI DRAFT

When it comes to responsible AI, Baeza-Yates is skeptical of the draft AI Act recently approved by the European Parliament. “I see a few conceptual problems there,” said Baeza-Yates.

“This would be the first time we would regulate the use of technology. I believe we should not regulate the use of technology but focus instead on the problems in a way that is independent of the technology. If we approach the issue from the perspective of ‘human rights’, the regulation would apply to all technologies. Otherwise, we will need to regulate all new technologies in the future, like blockchain or quantum computing.”

“Secondly, the Act is only applicable to AI. And any system that doesn’t use AI is free of regulation. I see this as a big loophole,” adds the researcher. “The third problem is that risk is a continuous variable. Dividing AI applications into four risk categories is a problem because those categories do not really exist. It’s a typical human bias to define categories that don’t exist. For example, ‘high’ and ‘low’. Why did they not add ‘medium’? It doesn’t matter because the boundaries are completely arbitrary. The same goes for race, as skin color is another continuous variable.”